Dear friends, the concept of self-driving cars is becoming more and more popular. You may know that Google and Tesla are working hard to make driverless cars more sophisticated. We already have cars with intelligent cruise control, parallel parking algorithms and automatic overtaking. But before the idea of self-driving cars is widely accepted, a crucial dilemma of algorithmic morality must be resolved. How should the car be programmed to act in the event of an unavoidable accident? Should it minimize the loss of life, even if it means sacrificing the occupants, or should it protect the occupants at all costs? Should it choose between these extremes at random?

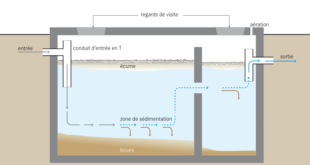

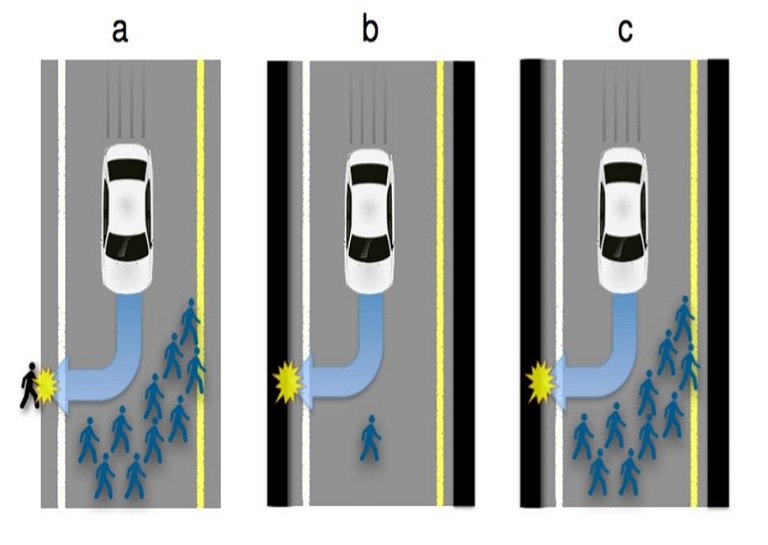

To understand the problem better, consider the scenario shown in the pictures.

The answers to the ethical questions mentioned above are important because they could have a big impact on how self-driving cars are accepted in society. Who would buy a car programmed to sacrifice its owner? We all know that self-driving cars are becoming a reality because we need to find a way to minimize, if not eliminate, these kinds of accidents.

The main questions and answers here are too complicated for me, but I would like to share them with you.

One way to approach this kind of problem is to act in a way that minimizes the loss of life. By this way of thinking, killing one person is better than killing 10.
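To make the options concrete, here is a minimal sketch of the three rules raised at the start of this post: minimize the loss of life, protect the occupants, or choose at random. Everything in it is an assumption made for illustration: the `Outcome` class, the function names and the death counts are hypothetical and do not come from any real car's software.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    """One possible maneuver and the deaths it is assumed to cause (hypothetical)."""
    name: str
    occupant_deaths: int
    pedestrian_deaths: int

    @property
    def total_deaths(self) -> int:
        return self.occupant_deaths + self.pedestrian_deaths


def minimize_loss_of_life(outcomes):
    # Utilitarian rule: pick the maneuver with the fewest deaths overall.
    return min(outcomes, key=lambda o: o.total_deaths)


def protect_occupants(outcomes):
    # Self-protective rule: fewest occupant deaths first, total deaths as tie-breaker.
    return min(outcomes, key=lambda o: (o.occupant_deaths, o.total_deaths))


def choose_at_random(outcomes, rng=random):
    # Random rule: every maneuver is equally likely.
    return rng.choice(outcomes)


# Hypothetical scenario: hit ten pedestrians, or swerve and kill the one occupant.
scenario = [
    Outcome("stay on course", occupant_deaths=0, pedestrian_deaths=10),
    Outcome("swerve into wall", occupant_deaths=1, pedestrian_deaths=0),
]

print(minimize_loss_of_life(scenario).name)
print(protect_occupants(scenario).name)
```

With these made-up numbers the two deterministic rules disagree, which is exactly the dilemma: the utilitarian rule swerves, the self-protective rule does not.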

But that approach may have other consequences. If fewer people buy self-driving cars because they are programmed to sacrifice their owners, then more people are likely to die because ordinary cars are involved in so many more accidents. The result is a Catch-22 situation.
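The Catch-22 can be illustrated with a little arithmetic. The sketch below is only a thought experiment: the fleet size, the adoption shares and the death rates are invented numbers, not data from any study, and `expected_deaths` is a hypothetical helper.

```python
def expected_deaths(total_cars, adoption, rate_self_driving, rate_ordinary):
    """Expected yearly deaths for a mixed fleet.

    Rates are deaths per million cars per year (hypothetical values).
    """
    self_driving_cars = total_cars * adoption
    ordinary_cars = total_cars - self_driving_cars
    return (self_driving_cars * rate_self_driving
            + ordinary_cars * rate_ordinary) / 1_000_000


TOTAL_CARS = 1_000_000
RATE_ORDINARY = 100      # assumed: ordinary cars cause many more deaths
RATE_UTILITARIAN = 10    # assumed: car that may sacrifice its owner
RATE_PROTECTIVE = 12     # assumed: car that always protects its owner

# Assumption: fewer people buy the car that may sacrifice them (20% vs 60%).
utilitarian_world = expected_deaths(TOTAL_CARS, 0.2, RATE_UTILITARIAN, RATE_ORDINARY)
protective_world = expected_deaths(TOTAL_CARS, 0.6, RATE_PROTECTIVE, RATE_ORDINARY)

print(round(utilitarian_world, 1), round(protective_world, 1))
```

Under these assumptions the "safer" utilitarian car leads to more deaths overall, simply because more ordinary cars stay on the road. Different numbers could reverse the result, so this shows the shape of the argument, not a prediction.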

To answer this question, Jean-Francois Bonnefon of the Toulouse School of Economics in France and a group of colleagues performed a study. The group used the science of experimental ethics, which poses ethical questions to a large number of people and collects their responses. The idea is that with a large enough population, there might be something interesting in the answers. They presented scenarios similar to the one above and asked people what the outcome should be.

The results of this study show that, generally, people were fine with the idea of self-driving cars minimizing the number of deaths by crashing into a wall and killing the driver. This utilitarian perspective stood out, but nonetheless people were not completely receptive to the whole idea.

From this study we can learn that there is much more to be done before a fully realized self-driving car can hit the road. This also raises other questions, for example: when an accident happens, who is to blame? Maybe the computer?

If you would like to see the whole study, check it out here via interestingengineering.com

World inside pictures Collect and share the best ideas that make our life easier
